What is Ramp Testing? – Continuously raising an input signal until the system breaks down.
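A minimal Python sketch of the idea, assuming a hypothetical `system_under_test` function that fails once its input exceeds some capacity. The function names, the `Overload` exception, and the capacity of 50 are illustrative assumptions, not part of any real tool.

```python
class Overload(Exception):
    """Raised by the stand-in system when the input is too high."""


def system_under_test(load, capacity=50):
    # Hypothetical stand-in: a real system would be exercised here.
    if load > capacity:
        raise Overload(f"cannot handle load {load}")
    return load


def ramp_test(system, start=1, step=1, limit=1000):
    """Raise the input step by step; return the first load that fails, or None."""
    load = start
    while load <= limit:
        try:
            system(load)
        except Overload:
            return load
        load += step
    return None


# First load at which the stand-in system breaks down.
breaking_point = ramp_test(system_under_test)
```

The `limit` parameter keeps the ramp from running forever if the system never breaks; in that case `ramp_test` returns `None`.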

What is Depth Testing? – A test that exercises a feature of a product in full detail.

What is Quality Policy? – The overall intentions and direction of an organization as regards quality as formally expressed by top management.

What is a Race Condition? – A cause of concurrency problems: multiple accesses to a shared resource, at least one of which is a write, with no mechanism used by any of them to coordinate simultaneous access.
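The definition can be illustrated with a small Python sketch. The class and function names (`UnsafeCounter`, `SafeCounter`, `hammer`) are made up for this example. The unprotected counter performs a read followed by a write on shared state, so updates from other threads can be lost; the second counter uses a lock as the moderating mechanism.

```python
import threading


class UnsafeCounter:
    """Read-modify-write with no protection: concurrent updates can be lost."""

    def __init__(self):
        self.value = 0

    def increment(self):
        current = self.value      # read the shared value
        self.value = current + 1  # write; another thread may have written in between


class SafeCounter:
    """Same counter, but a lock serializes access to the shared value."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        with self._lock:
            self.value += 1


def hammer(counter, threads=4, per_thread=10_000):
    """Call counter.increment() from several threads and return the final value."""
    def work():
        for _ in range(per_thread):
            counter.increment()

    workers = [threading.Thread(target=work) for _ in range(threads)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return counter.value


expected = 4 * 10_000
safe_total = hammer(SafeCounter())      # equals expected on every run
unsafe_total = hammer(UnsafeCounter())  # may fall short; varies from run to run
```

Whether the unsafe counter actually loses updates on a given run depends on thread scheduling, which is exactly what makes race conditions hard to reproduce in testing.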

What is an Emulator? – A device, computer program, or system that accepts the same inputs and produces the same outputs as a given system.

What is Dependency Testing? – Examines an application’s requirements for pre-existing software, initial states and configuration in order to maintain proper functionality.

What is Documentation testing? – The aim of this testing is to help prepare the accompanying documentation (user guide, installation guide, etc.) so that it is as simple, precise, and accurate as possible.

What is Code style testing? – This type of testing checks the code for conformance with development standards: rules for code comments; naming of variables, classes, and functions; maximum line length; ordering of separator symbols; placement of terms wrapped onto a new line, etc. Special tools exist to automate code style testing.

What is scripted testing? – Scripted testing means that test cases are developed before test execution, each with the results (and/or system reactions) it is expected to produce. These test cases can be designed by one (usually more experienced) specialist and performed by another tester.

Random Software Testing Terms and Definitions:

• Formal Testing: Performed by test engineers

• Informal Testing: Performed by the developers

• Manual Testing: That part of software testing that requires human input, analysis, or evaluation.

• Automated Testing: Software testing that utilizes a variety of tools to automate the testing process. Automated testing still requires a skilled quality assurance professional with knowledge of the automation tools and the software being tested to set up the test cases.

• Black box Testing: Testing software without any knowledge of the back-end of the system, structure or language of the module being tested. Black box test cases are written from a definitive source document, such as a specification or requirements document.

• White box Testing: Testing in which the software tester has knowledge of the back-end, structure and language of the software, or at least its purpose.

• Unit Testing: Unit testing is the process of testing a particular compiled program (e.g., a window, a report, an interface) independently, as a stand-alone component/program. The types and degrees of unit tests can vary among modified and newly created programs. Unit testing is mostly performed by the programmers, who are also responsible for creating the necessary unit test data.

• Incremental Testing: Incremental testing is partial testing of an incomplete product. The goal of incremental testing is to provide early feedback to software developers.

• System Testing: System testing is a form of black box testing. The purpose of system testing is to validate an application’s accuracy and completeness in performing the functions as designed.

• Integration Testing: Testing two or more modules or functions together with the intent of finding interface defects between the modules/functions.

• System Integration Testing: Testing of software components that have been distributed across multiple platforms (e.g., client, web server, application server, and database server) to produce failures caused by system integration defects (i.e. defects involving distribution and back-office integration).

• Functional Testing: Verifying that a module functions as stated in the specification and establishing confidence that a program does what it is supposed to do.

• Parallel/Audit Testing: Testing where the user reconciles the output of the new system to the output of the current system to verify the new system performs the operations correctly.

• Usability Testing: Usability testing is testing for ‘user-friendliness’. A way to evaluate and measure how users interact with a software product or site. Tasks are given to users and observations are made.

• End-to-end Testing: Similar to system testing – testing a complete application in a situation that mimics real world use, such as interacting with a database, using network communication, or interacting with other hardware, application, or system.

• Security Testing: Testing of database and network software in order to keep company data and resources secure from accidental misuse, hackers, and other malicious attackers.

• Sanity Testing: Sanity testing is performed whenever cursory testing is sufficient to prove the application is functioning according to specifications. This level of testing is a subset of regression testing. It normally includes testing basic GUI functionality to demonstrate connectivity to the database, application servers, printers, etc.

• Regression Testing: Testing with the intent of determining if bug fixes have been successful and have not created any new problems.

• Acceptance Testing: Testing the system with the intent of confirming readiness of the product and customer acceptance. Also known as User Acceptance Testing.

• Installation Testing: Testing with the intent of determining if the product is compatible with a variety of platforms and how easily it installs.

• Recovery/Error Testing: Testing how well a system recovers from crashes, hardware failures, or other catastrophic problems.

• Ad hoc Testing: Testing without a formal test plan or outside of a test plan. With some projects this type of testing is carried out as an addition to formal testing. Sometimes, if testing occurs very late in the development cycle, this will be the only kind of testing that can be performed, and it is usually done by skilled testers. Sometimes ad hoc testing is referred to as exploratory testing.

• Configuration Testing: Testing to determine how well the product works with a broad range of hardware/peripheral equipment configurations as well as on different operating systems and software.

• Load Testing: Testing with the intent of determining how well the product handles competition for system resources. The competition may come in the form of network traffic, CPU utilization or memory allocation.

• Penetration Testing: Penetration testing is testing how well the system is protected against unauthorized internal or external access, or willful damage. This type of testing usually requires sophisticated testing techniques.

• Stress Testing: Testing done to evaluate the behavior when the system is pushed beyond the breaking point. The goal is to expose the weak links and to determine if the system manages to recover gracefully.

• Smoke Testing: A quick, shallow test of a build’s most critical functions, conducted to decide whether the build is stable enough for more thorough testing.

• Pilot Testing: Testing that involves the users just before actual release to ensure that users become familiar with the release contents and ultimately accept it. Typically involves many users, is conducted over a short period of time and is tightly controlled. (See beta testing)

• Performance Testing: Testing with the intent of determining how efficiently a product handles a variety of events. Automated test tools geared specifically to test and fine-tune performance are used most often for this type of testing.

• Exploratory Testing: Any testing in which the tester dynamically changes what they’re doing for test execution, based on information they learn as they’re executing their tests.

• Beta Testing: Testing after the product is code complete. Betas are often widely distributed or even distributed to the public at large.

• Gamma Testing: Gamma testing is testing of software that has all the required features but has not gone through all the in-house quality checks.

• Mutation Testing: A method to determine test thoroughness by measuring the extent to which the test cases can discriminate the program from slight variants (mutants) of the program.

• Glass Box/Open Box Testing: Glass box testing is the same as white box testing. It is a testing approach that examines the application’s program structure, and derives test cases from the application’s program logic.

• Compatibility Testing: Testing used to determine whether other system software components such as browsers, utilities, and competing software will conflict with the software being tested.

• Comparison Testing: Testing that compares software weaknesses and strengths to those of competitors’ products.

• Alpha Testing: Testing after code is mostly complete or contains most of the functionality and prior to reaching customers. Sometimes a selected group of users is involved. More often this testing will be performed in-house or by an outside testing firm in close cooperation with the software engineering department.

• Independent Verification and Validation (IV&V): The process of exercising software with the intent of ensuring that the software system meets its requirements and user expectations and doesn’t fail in an unacceptable manner. The individual or group doing this work is not part of the group or organization that developed the software.

• Closed Box Testing: Closed box testing is the same as black box testing. A type of testing that considers only the functionality of the application.

• Bottom-up Testing: Bottom-up testing is a technique for integration testing. Because low-level components are tested first, a test engineer creates and uses test drivers that stand in for the higher-level components that have not yet been developed. The objective of bottom-up testing is to call low-level components first, for testing purposes.

• Bug: A software bug may be defined as a coding error that causes an unexpected defect, fault or flaw. In other words, if a program does not perform as intended, it is most likely a bug.

• Error: A mismatch between the program and its specification is an error in the program.

• Defect: A defect is a variance from a desired product attribute (it can be wrong, missing, or extra data). It can be of two types: a variance from the product specification or a variance from customer/user expectations. It is a flaw in the software system and has no impact until it affects the user/customer and the operational system. 90% of all defects can be caused by process problems.

• Failure: A defect that causes an error in operation or negatively impacts a user/ customer.

• Quality Assurance: Is oriented towards preventing defects. Quality Assurance ensures all parties concerned with the project adhere to the process and procedures, standards and templates and test readiness reviews.

• Quality Control: Quality control, or quality engineering, is a set of measures taken to ensure that defective products or services are not produced and that the design meets performance requirements.

• Verification: Verification ensures the product is designed to deliver all functionality to the customer; it typically involves reviews and meetings to evaluate documents, plans, code, requirements and specifications; this can be done with checklists, issues lists, walkthroughs and inspection meetings.

• Validation: Validation ensures that functionality, as defined in requirements, is the intended behavior of the product; validation typically involves actual testing and takes place after verification is completed.
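Of the terms above, mutation testing is one that is easy to illustrate in code. The following is a minimal Python sketch with hand-written mutants; the function `is_adult`, the two mutants, and both test suites are hypothetical examples, and real mutation tools generate the variants automatically.

```python
def is_adult(age):
    """Original program under test."""
    return age >= 18


def mutant_boundary(age):
    """Mutant: '>=' replaced by '>'."""
    return age > 18


def mutant_constant(age):
    """Mutant: constant 18 replaced by 21."""
    return age >= 21


def passes(suite, program):
    """True if the program produces the expected result for every case."""
    return all(program(arg) == expected for arg, expected in suite)


def mutation_score(suite, original, mutants):
    """Fraction of mutants the suite 'kills' (that is, causes to fail)."""
    assert passes(suite, original), "suite must pass on the original program"
    killed = sum(1 for mutant in mutants if not passes(suite, mutant))
    return killed / len(mutants)


mutants = [mutant_boundary, mutant_constant]
weak_suite = [(10, False), (30, True)]                # no cases near the boundary
strong_suite = weak_suite + [(18, True), (20, True)]  # adds boundary cases

weak_score = mutation_score(weak_suite, is_adult, mutants)
strong_score = mutation_score(strong_suite, is_adult, mutants)
```

The weak suite cannot tell either mutant from the original, so its score is 0.0. The strong suite includes the boundary value 18, which both mutants get wrong, so its score is 1.0. A higher score indicates a more thorough test suite.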

Testing Levels and Types

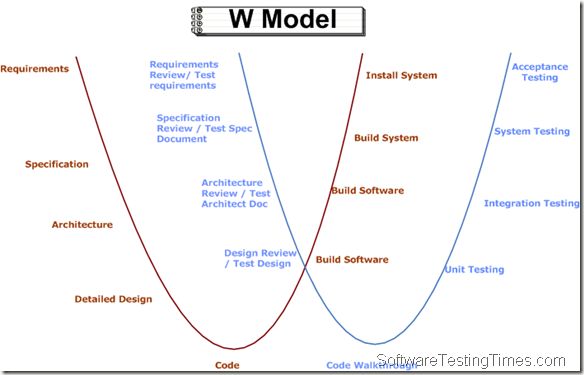

There are basically three levels of testing: Unit Testing, Integration Testing, and System Testing.

Various types of testing come under these levels.

Unit Testing: To verify a single program or a section of a single program.

Integration Testing: To verify interaction between system components.

Prerequisite: unit testing completed on all components that compose a system.

System Testing: To verify and validate behaviors of the entire system against the original system objectives.
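The difference between the first two levels can be sketched with Python's standard `unittest` module. The components `parse_price` and `Cart` are hypothetical and exist only for this illustration: the unit tests verify each one on its own, while the integration test verifies that they work together.

```python
import unittest


def parse_price(text):
    """Component A: convert a string such as '2.50' to integer cents."""
    return round(float(text) * 100)


class Cart:
    """Component B: accumulates prices given in cents."""

    def __init__(self):
        self.total_cents = 0

    def add(self, cents):
        if cents < 0:
            raise ValueError("price must not be negative")
        self.total_cents += cents


class UnitTests(unittest.TestCase):
    """Unit level: each component is verified on its own."""

    def test_parse_price(self):
        self.assertEqual(parse_price("2.50"), 250)

    def test_cart_rejects_negative(self):
        with self.assertRaises(ValueError):
            Cart().add(-1)


class IntegrationTests(unittest.TestCase):
    """Integration level: the components are verified working together."""

    def test_parsed_prices_flow_into_cart(self):
        cart = Cart()
        for text in ["2.50", "0.99"]:
            cart.add(parse_price(text))
        self.assertEqual(cart.total_cents, 349)


def run_all():
    """Run both levels and return the unittest result object."""
    loader = unittest.defaultTestLoader
    suite = unittest.TestSuite()
    suite.addTests(loader.loadTestsFromTestCase(UnitTests))
    suite.addTests(loader.loadTestsFromTestCase(IntegrationTests))
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling `run_all()` executes the unit tests first and the integration test afterwards, mirroring the prerequisite that unit testing is completed before integration testing begins.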

Software testing is a process that identifies the correctness, completeness, and quality of software.
