Test Case is a commonly used term for a specific test. This is usually the smallest unit of testing. A Test Case consists of information such as the requirements being tested, test steps, verification steps, prerequisites, outputs, and the test environment.

A Test Case is:

– A set of inputs, execution preconditions, and expected outcomes developed for a particular objective, such as to exercise a particular program path or to verify compliance with a specific requirement.

– A detailed procedure that fully tests a feature or an aspect of a feature. Whereas the test plan describes what to test, a test case describes how to perform a particular test. You need to develop a test case for each test listed in the test plan.

Test cases should be written by a team member who understands the function or technology being tested, and each test case should be submitted for peer review.

Organizations take a variety of approaches to documenting test cases; these range from developing detailed, recipe-like steps to writing general descriptions. In detailed test cases, the steps describe exactly how to perform the test. In descriptive test cases, the tester decides at the time of the test how to perform the test and what data to use.

Detailed test cases are recommended for testing software because determining pass or fail criteria is usually easier with this type of case. In addition, detailed test cases are reproducible and are easier to automate than descriptive test cases. This is particularly important if you plan to compare the results of tests over time, such as when you are optimizing configurations. The drawback is that detailed test cases are more time-consuming to develop and maintain. On the other hand, test cases that are open to interpretation are not repeatable and can require debugging, consuming time that would be better spent on testing.

When planning your tests, remember that it is not feasible to test everything. Instead of trying to test every combination, prioritize your testing so that you perform the most important tests first: those that focus on areas that present the greatest risk or have the greatest probability of occurring.
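As a rough illustration of this kind of prioritization, the sketch below scores each planned test as likelihood multiplied by impact and runs the highest scores first. The scoring formula, the 1-to-5 scales, and the test names are assumptions made for this example; your organization may weigh risk differently.

```python
from dataclasses import dataclass


@dataclass
class PlannedTest:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (very likely)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)

    @property
    def risk_score(self) -> int:
        # One common heuristic: risk = likelihood x impact.
        return self.likelihood * self.impact


def prioritize(tests):
    """Return the tests ordered from highest to lowest risk score."""
    return sorted(tests, key=lambda t: t.risk_score, reverse=True)


# Hypothetical tests taken from an imaginary test plan.
planned = [
    PlannedTest("Export report to PDF", likelihood=2, impact=2),
    PlannedTest("Login with valid credentials", likelihood=5, impact=5),
    PlannedTest("Change profile picture", likelihood=3, impact=1),
]

for test in prioritize(planned):
    print(f"{test.risk_score:>2}  {test.name}")
```

If time runs out, the tests at the bottom of this list are the ones that get dropped.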

Once the Test Lead has prepared the Test Plan, the individual testers begin by preparing Test Cases for each Module and for each level of Software Testing, such as Unit Testing, Integration Testing, System Testing, and User Acceptance Testing.

General Guidelines to Prepare Test Cases

As a tester, the best way to determine whether the software complies with its requirements is to design effective test cases that provide a thorough test of a unit. Various test case design techniques enable testers to develop effective test cases. Besides applying these design techniques, every tester needs to keep in mind some general guidelines that aid in test case design:

a. The purpose of each test case is to run the test in the simplest way possible. [Suitable techniques – Specification derived tests, Equivalence partitioning]

b. Concentrate initially on positive testing, i.e. the test case should show that the software does what it is intended to do. [Suitable techniques – Specification derived tests, Equivalence partitioning, State-transition testing]

c. Existing test cases should be enhanced and further test cases should be designed to show that the software does not do anything that it is not specified to do, i.e. negative testing. [Suitable techniques – Error guessing, Boundary value analysis, Internal boundary value testing, State-transition testing]

d. Where appropriate, test cases should be designed to address issues such as performance, safety requirements, and security requirements. [Suitable techniques – Specification derived tests]

e. Further test cases can then be added to the unit test specification to achieve specific test coverage objectives. Once coverage tests have been designed, the test procedure can be developed and the tests executed [Suitable techniques – Branch testing, Condition testing, Data definition-use testing, State-transition testing]
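Two of the techniques named above, equivalence partitioning and boundary value analysis, can be sketched briefly. The unit under test here is hypothetical: a check that accepts ages from 18 to 60 inclusive. The range, the function names, and the chosen representative values are all assumptions for illustration.

```python
def is_valid_age(age: int) -> bool:
    """Hypothetical unit under test: accepts ages from 18 to 60 inclusive."""
    return 18 <= age <= 60


def boundary_values(low: int, high: int):
    """Values just outside, on, and just inside each boundary of a range."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


# Equivalence partitioning: one representative value per partition.
partition_cases = [
    ("below range (negative test)", 10, False),
    ("within range (positive test)", 35, True),
    ("above range (negative test)", 75, False),
]

# Boundary value analysis: expected results come from the specification,
# not from the code being tested.
boundary_cases = list(
    zip(boundary_values(18, 60), [False, True, True, True, True, False])
)

for label, value, expected in partition_cases:
    status = "Pass" if is_valid_age(value) == expected else "Fail"
    print(f"{status}: {label}, input {value}")

for value, expected in boundary_cases:
    status = "Pass" if is_valid_age(value) == expected else "Fail"
    print(f"{status}: boundary input {value}")
```

Three partition tests plus six boundary tests cover the range far more cheaply than trying every possible age.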

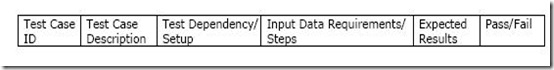

Test Case Template

Each organization uses its own standard template to prepare Test Cases. An ideal template is provided below.

Fig 1: Common Columns in Test cases that are present in all Test case formats

Fig 2: A very detailed Low Level Test Case format

The name of the Test Case Document itself follows a naming convention, shown below, so that the Project Name, Version Number, and Date of Release can be identified from the name alone.

DTC_Functionality Name_Project Name_Ver No

DTC – Detailed Test Case

Functionality Name: The functionality for which the test cases are developed

Project Name: Name of the Project

Ver No: Version number of Software

(You can add Release Date also)

The bolded words should be replaced with the actual Project Name, Version Number, and Release Date. For example: Bugzilla Test Cases 1.2.0.3 01_12_04
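A small helper can keep document names consistent with the DTC convention. This is a minimal sketch: the function name, the use of an underscore before the optional release date, and the "Login" functionality are assumptions for illustration.

```python
def document_name(functionality: str, project: str, version: str,
                  release_date: str = "") -> str:
    """Build a name of the form DTC_Functionality_Project_Version[_Date]."""
    parts = ["DTC", functionality, project, version]
    if release_date:
        # The release date is optional in the convention described above.
        parts.append(release_date)
    return "_".join(parts)


print(document_name("Login", "Bugzilla", "1.2.0.3"))
print(document_name("Login", "Bugzilla", "1.2.0.3", "01_12_04"))
```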

The top-left corner of the template holds the company emblem, and we fill in details such as Project ID, Project Name, Author of Test Cases, Version Number, Date of Creation, and Date of Release.

For each Test Case we maintain the fields Test Case ID, Requirement Number, Version Number, Type of Test Case, Test Case Name, Action, Expected Result, Cycle #1, Cycle #2, Cycle #3, and Cycle #4. Each Cycle is in turn divided into Actual Result, Status, Bug ID, and Remarks.

Test Case ID:

The Test Case ID also follows a standard. If a test case belongs to the application as a whole and is not related to a particular Module, we number it starting from TC001; if the same test case has more than one expected result, we name each one TC001.1, and so on. If a test case is related to a Module, we name it M01TC001, and if the Module has a sub-module, we name it M01SM01TC001. This way we can easily identify which Module and sub-module a test case belongs to. Another advantage of this convention is that we can add new test cases without renumbering all the others, because the change is limited to that Module.
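The ID convention can be captured in a short function. This is a sketch under assumptions: the function name is hypothetical, and the padding widths (two digits for modules and sub-modules, three for the test case number) are inferred from the examples TC001, M01TC001, and M01SM01TC001.

```python
from typing import Optional


def make_test_case_id(number: int,
                      module: Optional[int] = None,
                      sub_module: Optional[int] = None,
                      result: Optional[int] = None) -> str:
    """Build IDs such as TC001, TC001.1, M01TC001, or M01SM01TC001."""
    if sub_module is not None and module is None:
        raise ValueError("a sub-module must belong to a module")

    prefix = ""
    if module is not None:
        prefix += f"M{module:02d}"
    if sub_module is not None:
        prefix += f"SM{sub_module:02d}"

    case_id = f"{prefix}TC{number:03d}"
    if result is not None:
        # Suffix used when one test case has several expected results.
        case_id += f".{result}"
    return case_id


print(make_test_case_id(1))                          # application-level case
print(make_test_case_id(1, result=1))                # first expected result
print(make_test_case_id(1, module=1))                # case inside module 1
print(make_test_case_id(1, module=1, sub_module=1))  # case inside a sub-module
```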

Requirement Number:

This gives the reference to the Requirement Number in the SRS/FRD; for each Test Case we specify the Requirement it belongs to. The advantage of maintaining this in the Test Case Document is that if a requirement changes in the future, we can easily estimate how many test cases will be affected.
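The impact estimate described here amounts to grouping test cases by requirement. The sketch below shows one way to do it; the requirement numbers (REQ-001 and so on) and the test case records are hypothetical.

```python
from collections import defaultdict

# Hypothetical records: (Test Case ID, Requirement Number).
test_cases = [
    ("M01TC001", "REQ-001"),
    ("M01TC002", "REQ-001"),
    ("M01TC003", "REQ-002"),
    ("M02TC001", "REQ-003"),
    ("M02TC002", "REQ-001"),
]


def build_traceability(cases):
    """Map each Requirement Number to the Test Case IDs that cover it."""
    by_requirement = defaultdict(list)
    for case_id, requirement in cases:
        by_requirement[requirement].append(case_id)
    return dict(by_requirement)


trace = build_traceability(test_cases)

# If REQ-001 changes, these are the test cases that need review.
affected = trace.get("REQ-001", [])
print(f"REQ-001 affects {len(affected)} test cases: {affected}")
```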

Version Number:

Under this column we specify the Version Number in which that particular test case was introduced, so that we can identify how many Test Cases there are for each Version.

Type of Test Case:

This column lists the different types of Test Cases included in the Test Plan, such as GUI, Functionality, Regression, Security, System, User Acceptance, Load, and Performance. While designing a Test Case we select one of these options. The main objective of this column is to show how many test cases of each type, for example GUI or Functionality, there are in each Module. Based on this we can estimate the resources required.
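Counting test cases per Module and type is a simple tally. The following sketch uses hypothetical records to show the idea.

```python
from collections import Counter

# Hypothetical records: (Module, Type of Test Case).
case_types = [
    ("M01", "GUI"),
    ("M01", "Functionality"),
    ("M01", "Functionality"),
    ("M02", "GUI"),
    ("M02", "Security"),
]

# Counter tallies how often each (module, type) pair occurs.
counts = Counter(case_types)

for (module, case_type), total in sorted(counts.items()):
    print(f"{module}  {case_type:<14} {total}")
```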

Test Case Name:

This gives a more specific name, such as the name of the particular button or text box to which the Test Case applies. In other words, we specify the name of the object under test, for example OK button or Login form.

Action (Input):

This is a very important part of the Test Case because it gives a clear picture of what you are doing to the specific object. It can be thought of as the navigation for the Test Case. Based on the steps written here, we perform the operations on the actual application.

Expected Result:

This is the result of the above action. It specifies what the specification or the user expects from that particular action. It should be clear, and we sub-divide the Test Case for each expectation so that we can specify pass or fail criteria for each one.

Up to this point, we prepare the Test Case Document before seeing the actual application, based on the System Requirement Specification/Functional Requirement Document and the Use Cases. We then send the document to the concerned Test Lead for approval. The Test Lead reviews it to check that the Test Cases cover all user requirements, and then approves the document.

We are now ready to test with this document and wait for the actual application. When testing begins, we use the Cycle #1 section.

Under each Cycle we have Actual, Status, Bug ID, and Remarks.

The number of Cycles depends on the organization. Some organizations document three Cycles, while others maintain information for four.

I have provided only one Cycle in this template; add more Cycles based on your requirements.

Actual:

We test the actual application against each Test Case. If the result matches the Expected Result, we record it as “As Expected”; otherwise we write down what actually happened after performing the action.

Status:

This simply indicates the Pass or Fail status of that particular Test Case. If the Actual and Expected results do not match, the Status is Fail; otherwise it is Pass. For passed Test Cases the Bug ID should be empty, and for failed Test Cases it should be the ID of the corresponding bug in the Bug Report.
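The rules for one Cycle can be sketched as a small record type that derives the Status from the Actual column and checks the Bug ID rule. The class name, field names, and the bug ID format "BUG-101" are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional

AS_EXPECTED = "As Expected"


@dataclass
class CycleResult:
    actual: str                   # "As Expected" or what actually happened
    bug_id: Optional[str] = None  # reference into the Bug Report, if any
    remarks: str = ""             # optional extra information

    @property
    def status(self) -> str:
        # Pass only when the actual result matched the expected result.
        return "Pass" if self.actual == AS_EXPECTED else "Fail"

    def validate(self) -> None:
        """Enforce: no Bug ID on Pass, a Bug ID on every Fail."""
        if self.status == "Pass" and self.bug_id is not None:
            raise ValueError("a passed test case must not carry a Bug ID")
        if self.status == "Fail" and not self.bug_id:
            raise ValueError("a failed test case needs a Bug ID")


passed = CycleResult(actual=AS_EXPECTED)
failed = CycleResult(actual="Error page shown after clicking OK",
                     bug_id="BUG-101")

for result in (passed, failed):
    result.validate()
    print(result.status, result.bug_id)
```

Deriving the Status instead of typing it by hand avoids rows where the Actual column and the Status column disagree.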

Bug ID:

This gives the reference to the Bug Number in the Bug Report, so that the developer or tester can easily identify the bug associated with that Test Case.

Remarks:

This part is optional. It is used for any extra information.
