Testing with Visual Studio Team System 2010 (VS 2010) – Part 2

- Unit testing, in which you call a class and verify that it behaves as expected;

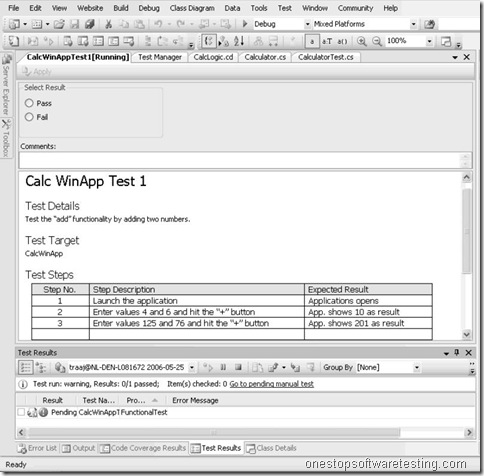

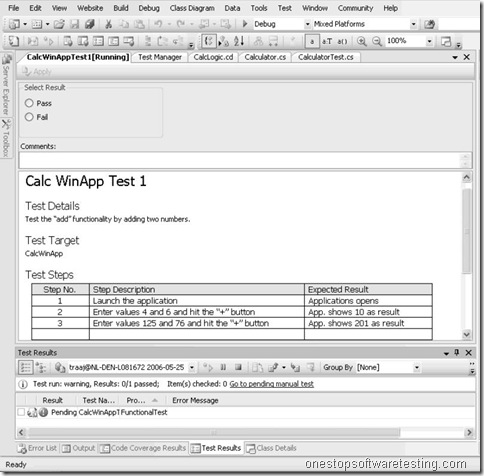

- Manual testing;

- Generic testing, which wraps an existing test application so that it runs as part of a larger test;

- Web testing, to ensure that HTML applications function correctly;

- Load testing, to ensure that the application is scalable.
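In Visual Studio 2010 itself, unit tests are written in C# or Visual Basic using attributes such as `[TestClass]` and `[TestMethod]`. As a language-neutral sketch of what "call a class and verify that it behaves as expected" looks like, here is the same idea in Python's built-in `unittest` module. The `Account` class is hypothetical and exists only for illustration.

```python
import unittest


class Account:
    """Hypothetical class under test, invented for this illustration."""

    def __init__(self, balance: int = 0) -> None:
        self.balance = balance

    def withdraw(self, amount: int) -> None:
        # Reject overdrafts instead of letting the balance go negative.
        if amount > self.balance:
            raise ValueError("insufficient funds")
        self.balance -= amount


class AccountTests(unittest.TestCase):
    def test_withdraw_reduces_balance(self) -> None:
        # Arrange: create the object under test.
        account = Account(balance=100)
        # Act: call the method being tested.
        account.withdraw(30)
        # Assert: verify that the class behaved as expected.
        self.assertEqual(account.balance, 70)

    def test_overdraft_is_rejected(self) -> None:
        account = Account(balance=10)
        with self.assertRaises(ValueError):
            account.withdraw(50)
        # A rejected withdrawal must leave the balance untouched.
        self.assertEqual(account.balance, 10)
```

Saved as `test_account.py`, the file can be run with `python -m unittest test_account`. The arrange/act/assert layout maps directly onto a Visual Studio test method.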

VSTS (Visual Studio Team System) improves the workflow by allowing developers and testers to store their tests and results in one place. Because all of the information lives in a single data warehouse, a project manager can run a report to see how many tests have passed or failed and determine where the problems are.

With VSTS, testing capabilities are integrated directly into the Visual Studio environment, so tests can be written without switching back and forth between applications. VSTS also supports running tests as part of an automated build.

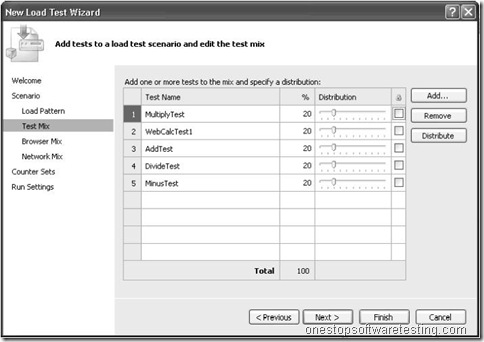

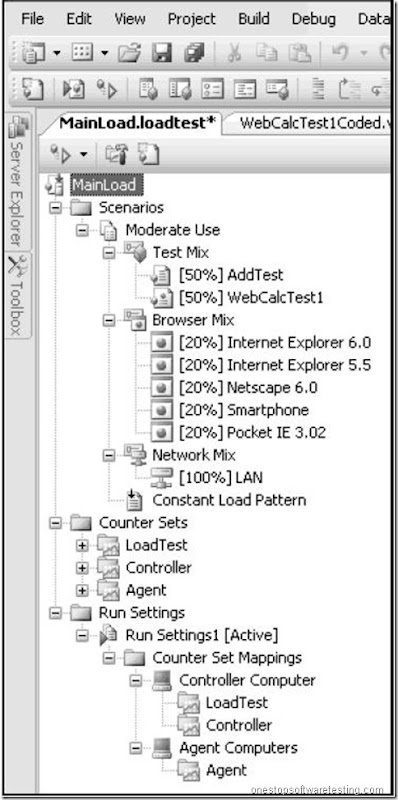

VSTS also allows a developer to aggregate multiple existing tests so that automated test agents can execute them, with each agent simulating up to about a thousand users for load testing. Several agents can run concurrently, increasing the load in multiples of roughly a thousand users. This lets a team reuse the work it has already put into the various kinds of tests.

This also makes it easier for developers themselves to run a load test built from the unit tests of individual code modules, so they can identify problems earlier, save time, and learn to write better code.
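Visual Studio configures load tests through its own load test editor (load pattern, test mix, agents), not through code like the example below. As a rough sketch of the underlying idea, reusing a unit test as the unit of load, the following Python runs one test function from many concurrent threads standing in for virtual users, then tallies passes, failures, and the slowest run. `checkout_test`, `run_load_test`, and the user counts are hypothetical. Threads on a single machine are only an illustration and do not replace distributed test agents.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def checkout_test() -> None:
    """Hypothetical unit test reused as the unit of load.

    It stands in for a real test and raises AssertionError on failure.
    """
    total = sum(range(1000))
    assert total == 499500


def run_one(test) -> tuple[bool, float]:
    """Run a test once and return (passed, elapsed_seconds)."""
    start = time.perf_counter()
    try:
        test()
        passed = True
    except Exception:
        # Assertion failures and unexpected errors both count as failures.
        passed = False
    return passed, time.perf_counter() - start


def run_load_test(test, virtual_users: int, iterations: int) -> dict:
    """Simulate `virtual_users` concurrent users, each running `test` `iterations` times."""

    def user_session(_user_id: int) -> list[tuple[bool, float]]:
        return [run_one(test) for _ in range(iterations)]

    # One worker thread per simulated user, so all sessions overlap in time.
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        sessions = list(pool.map(user_session, range(virtual_users)))

    results = [r for session in sessions for r in session]
    return {
        "runs": len(results),
        "passed": sum(1 for ok, _ in results if ok),
        "failed": sum(1 for ok, _ in results if not ok),
        "max_seconds": max(elapsed for _, elapsed in results),
    }


if __name__ == "__main__":
    print(run_load_test(checkout_test, virtual_users=50, iterations=4))
```

Because the load is built from an existing test, any assertion that fails under concurrency shows up directly in the `failed` count. This is the same benefit the article describes for load tests assembled from unit tests.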

VSTS's automated test-code generation can save a developer two to three minutes of work per method.

Another key advantage is the Test List feature, which manages tests in hierarchical categories that can be shared across projects. Tests can be grouped in two ways: by project and folder, or by Test List. Test Lists make it easier to manage tests in batches. If you have twenty tests in one project and twenty in another, you can include them all by selecting one group of check boxes, and you can reorganize your groups very easily.
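In Visual Studio, Test Lists are built in the Test List Editor rather than in code. The sketch below is only an analogy: it shows how named, nestable groups can cut across projects, using Python `unittest.TestSuite` objects. `BillingTests` and `ShippingTests` stand in for two hypothetical projects, and the list names "Smoke" and "Nightly" are illustrative.

```python
import unittest


# Tests from two hypothetical projects; the class names are illustrative.
class BillingTests(unittest.TestCase):
    def test_invoice_total(self) -> None:
        self.assertEqual(sum([10, 20, 30]), 60)

    def test_discount(self) -> None:
        # 10% off 100 should be 90 (integer arithmetic avoids float noise).
        self.assertEqual(100 * 90 // 100, 90)


class ShippingTests(unittest.TestCase):
    def test_weight_limit(self) -> None:
        self.assertLessEqual(25, 30)


def build_test_lists() -> dict[str, unittest.TestSuite]:
    """Group tests from both projects into named lists that can nest."""
    # "Smoke" cuts across the two projects.
    smoke = unittest.TestSuite([
        BillingTests("test_invoice_total"),
        ShippingTests("test_weight_limit"),
    ])
    # "Nightly" contains the Smoke list plus one more test, forming a hierarchy.
    nightly = unittest.TestSuite([
        smoke,
        BillingTests("test_discount"),
    ])
    return {"Smoke": smoke, "Nightly": nightly}


if __name__ == "__main__":
    lists = build_test_lists()
    unittest.TextTestRunner(verbosity=2).run(lists["Nightly"])
```

Running a parent list also runs every child list inside it. This is the batching behavior that makes hierarchical Test Lists convenient.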

VSTS and TDD

In TDD the test always comes first; in unit testing in the broadest sense, this is not always the case. The basic idea of TDD is: test a little, code a little, refactor a little, in that sequence! The rhythm for the entire cycle is seconds or minutes. Generating tests after the fact is not considered to be TDD.

On the other hand, unit testing after the code is developed is not necessarily bad; it simply does not serve the goals of TDD. Some testing is always better than no testing. However, software implemented with a test-driven approach, if done properly, results in simpler systems that are easier to understand and maintain, with near-zero-defect quality.
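To make the "test a little, code a little, refactor a little" cycle concrete, here is a minimal Python sketch in the order TDD prescribes. The tests come first and are written before the function exists. The `slugify` function is a hypothetical example chosen only for its small size.

```python
import unittest


# Step 1 (test a little): write the tests first. Running them before
# slugify() exists fails, which is the expected "red" state.
class SlugifyTests(unittest.TestCase):
    def test_lowercases_and_joins_words(self) -> None:
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_collapses_extra_whitespace(self) -> None:
        self.assertEqual(slugify("  Team   System "), "team-system")


# Step 2 (code a little): write the simplest code that makes the tests pass.
# Step 3 (refactor a little): with the tests green, tidy up and rerun them.
# Here str.split() with no arguments already trims the ends and collapses
# repeated whitespace, so no extra cleanup code is needed.
def slugify(title: str) -> str:
    return "-".join(title.lower().split())
```

Each pass through the cycle adds one small test, just enough code to pass it, and a cleanup step, all within seconds or minutes. Run it with `python -m unittest` after each step.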

VSTS plug-ins

Compuware DevPartner and TestPartner provide interactive feedback, coding advice, and support for corporate coding standards, enabling developers to find and repair software problems. These tools now plug into Visual Studio Team System (VSTS), making detailed diagnostic data available to the developer.

Other third parties supporting VSTS include AutomatedQA, with TestComplete, and Borland, with CaliberRM.
