Simplified – Shift Left in Software Testing

– Article by Sumit Kalra

Shift Left is a buzzword in software testing. It is not new; in fact, it has always been around. Shift Left is about creating a culture where testers are involved early in the software development life cycle, so that testing activities can start early. The idea is to reduce risk.

Perhaps inspired by the maxim “a stitch in time saves nine”, Shift Left is a practical attempt to ensure a timely stitch: to check for errors earlier than is conventional. Shift Left testing means testing earlier in the software development cycle, so that risks and unknowns are reduced, which enables smooth deliveries to clients.

Performing testing activities late in the cycle leads to a failure to manage evolving demands and requirements, and soon produces unhealthy consequences for the organization, ranging from higher production costs to longer time to market and unexpected defects.

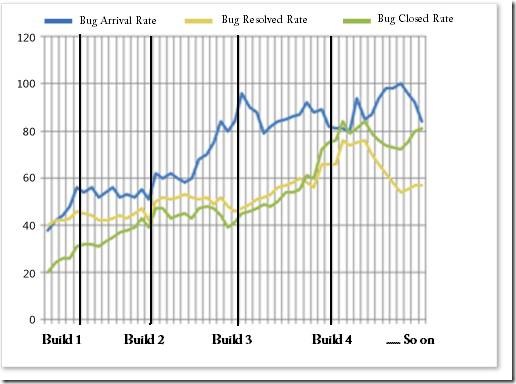

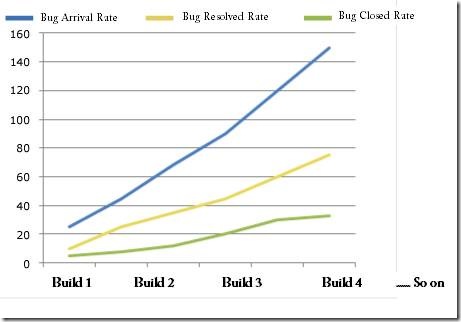

Studies indicate that the cost of fixing bugs late in the software development life cycle is very high. A must-read – http://softwaretestingtimes.com/2010/04/why-start-testing-early.html

When Shift Left practices are adopted in the SDLC, system testing activities take place much earlier in the software development life cycle. The goal is to fix minor issues before they grow into problems later in the project, and thereby meet the quality bar for delivery. Organizations that adopt the Shift Left strategy are able to test, analyze the project, evaluate the system, and refine it bit by bit into something much better.

A Few Examples of Shift Left:

- Pair with the developers – More collaboration and brainstorming on the requirements and test scenarios with the team (including Devs and PO), so that unknowns and risks are discovered earlier. Devs and QEs will share the same understanding of the requirements. As a result:

  - Rework (issue fixing and retesting) will be less.

  - Scope creep will be less.

- Test different layers – In SOA applications, APIs are developed first. The team should plan API testing so that issues are identified early in the cycle (rather than testing only from the UI).

- Plan non-functional testing early in the cycle (performance testing, security testing, etc.) – This identifies issues early and reduces risk.

- Automate the “Automation Test Case Execution” – Integrate the automation scripts with Jenkins or any other build automation tool, so that they run in the CI environment before new code is deployed. This helps verify whether a new code change is safe.

- Automate unit tests, integration tests, and API tests – These tests run faster and help identify defects early in the cycle.
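To make the "test different layers" and "automate" points concrete, here is a minimal sketch in Python using only the standard library. Everything in it is an assumption made for illustration: the `order_total` rule, the `order_api` WSGI endpoint at `/total`, and the `call_api` helper are hypothetical and do not come from any real project. It shows a unit test on a business rule and an API-level test that exercises the endpoint in-process, with no UI involved.

```python
import json
import unittest
from wsgiref.util import setup_testing_defaults


def order_total(prices, discount_percent=0):
    """Hypothetical business rule: sum the prices, then apply a discount."""
    if not 0 <= discount_percent <= 100:
        raise ValueError("discount_percent must be between 0 and 100")
    return round(sum(prices) * (100 - discount_percent) / 100, 2)


def order_api(environ, start_response):
    """Hypothetical WSGI API layer exposing the rule at GET /total."""
    headers = [("Content-Type", "application/json")]
    if environ["PATH_INFO"] == "/total":
        body = json.dumps({"total": order_total([10.0, 5.5], 10)}).encode()
        start_response("200 OK", headers)
    else:
        body = json.dumps({"error": "not found"}).encode()
        start_response("404 Not Found", headers)
    return [body]


def call_api(path):
    """Invoke the WSGI app directly, without a browser or a running server."""
    environ = {"PATH_INFO": path, "REQUEST_METHOD": "GET"}
    setup_testing_defaults(environ)  # fills in the remaining WSGI keys
    captured = {}

    def start_response(status, response_headers):
        captured["status"] = status

    body = b"".join(order_api(environ, start_response))
    return captured["status"], json.loads(body)


class OrderTotalUnitTest(unittest.TestCase):
    """Unit level: the rule is checked as soon as it is written."""

    def test_discount_is_applied(self):
        self.assertEqual(order_total([10.0, 5.5], 10), 13.95)

    def test_invalid_discount_is_rejected(self):
        with self.assertRaises(ValueError):
            order_total([10.0], 150)


class OrderApiTest(unittest.TestCase):
    """API level: the endpoint is checked before any UI exists."""

    def test_total_endpoint(self):
        status, payload = call_api("/total")
        self.assertEqual(status, "200 OK")
        self.assertEqual(payload, {"total": 13.95})

    def test_unknown_path_returns_404(self):
        status, _ = call_api("/missing")
        self.assertEqual(status, "404 Not Found")


def run_suite():
    """Run both layers; a CI step can fail the build if this is unsuccessful."""
    loader = unittest.defaultTestLoader
    suite = unittest.TestSuite()
    suite.addTests(loader.loadTestsFromTestCase(OrderTotalUnitTest))
    suite.addTests(loader.loadTestsFromTestCase(OrderApiTest))
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

If this were saved as a test module, a Jenkins (or other CI) build step could run it with `python -m unittest` on every commit. That command exits with a non-zero status when any test fails, which is typically how a build tool decides to stop before deployment.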

Advantages of Shifting Left:

- Faster delivery – Accelerate release cycles and ensure smooth deliveries.

By shifting left, a team can improve the speed of delivery of the project. This is because the team finds flaws early in the development phase, shortens the interval between releases while making the necessary adjustments, and ends up producing refined, quality software. A well-planned procedure can lead to quality development and timely completion of tasks. The idea of Quality Assurance is the continual improvement of these procedures after they are developed and documented.

- Software development free of hassles

All the project requirements become clear to every member of the organization when errors are detected early, in the requirements phase.

- Meeting customer demands sufficiently

The Shift Left technique improves client satisfaction, as the approach enables you to deliver faster and with higher quality.

- Rework is reduced – Defects caused by human error are minimized.

- Time is saved, and the team can focus more on automation testing.

- Risk is reduced – Cut down on complications that could surface in production.

To summarize, Shift Left is a step towards:

Delivering QUALITY @ SPEED

Reducing the COST without cutting the RESOURCES

