- Software testing provides visibility into product and process quality, both as a measurement of current performance and as historical data for future estimation and quality assurance.

| Sl. No | Sample Testing Reliability Matrix Name | Purpose | Artifact Template |
| --- | --- | --- | --- |
| 1 | QA Ledger-Script ID’s & Release plan | To keep track of the test script id allocated for use cases/modules and release wise plan for future use. | |
| 2 | Condition ID’s- Master Inventory | To keep track of the test condition ids allocated for individual use case/module release wise for future use. | |
| 3 | Script ID’s-Master Inventory | To keep track of the test script ids allocated for individual use case/module release wise for future use. | |
| 4 | Test Script | Contains several test cases of a single use case/module (sometimes multiple) with reference to test condition ids. | |
| 5 | Test Criteria | Contains the test script id and test condition id along with the prerequisite data needed for testing and data validation. | |
| 6 | Masters Conditions Inventory | It comprises all the test conditions descriptions with test condition id and script id for all use case/module. | |
| 7 | Repository Matrix | It comprises the count of test script and test condition ids for all the use cases/module with a focus on the particular release system testing and acceptance testing. | |
| 8 | Test Execution Dashboard / IR Dashboard | Test Execution Dashboard gives the detail about the testing status and their results. Dashboard-IR (Incidence Reports) gives the detail about the defects raised during various phases of testing life cycle. | |

2.4.2.1 Testing Characterization

Once the business requirements are written, methods/processes for ensuring that the system contains the functionality specified must be developed.

Steps to evaluate testing during and after the requirements gathering phase:

1. To validate the requirements, test plans are written that contain multiple test cases; each test case is based on one system state and tests some functions that are based on a related set of requirements.

2. In the total set of test cases, each requirement must be tested at least once, and some requirements will be tested several times because they are involved in multiple system states in varying scenarios and in different ways.

3. It is important to ensure that each requirement is adequately, but not excessively, tested.

4. In some cases, the requirements can be grouped together using criticality to mission success as their common thread; these must be extensively tested.

5. In other cases, requirements can be identified as low criticality; if a problem occurs in their functionality, it does not affect mission success, and testing can still be considered successful.
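The steps above can be turned into a simple review of a test plan. The following is a minimal sketch, not a prescribed method: the criticality labels, the `MIN_TESTS` and `MAX_TESTS` thresholds, and the function name `review_test_plan` are all assumptions chosen for illustration, and a real project would set its own limits for "adequate" and "excessive" testing.

```python
from collections import defaultdict

# Assumed thresholds (not from the article): minimum number of test cases
# per requirement by criticality, and a cap above which testing is flagged.
MIN_TESTS = {"high": 3, "low": 1}
MAX_TESTS = 10


def review_test_plan(criticality, test_cases):
    """Flag requirements that look untested, under-tested, or over-tested.

    criticality: {requirement_id: "high" | "low"}
    test_cases:  {test_case_id: [requirement_id, ...]}
    """
    # Invert the plan: which unique test cases touch each requirement?
    tests_for = defaultdict(set)
    for test_id, req_ids in test_cases.items():
        for req_id in req_ids:
            tests_for[req_id].add(test_id)

    findings = {}
    for req_id, level in criticality.items():
        count = len(tests_for.get(req_id, ()))
        if count == 0:
            findings[req_id] = "untested"
        elif count < MIN_TESTS[level]:
            findings[req_id] = "under-tested"
        elif count > MAX_TESTS:
            findings[req_id] = "possibly over-tested"
    return findings


# Hypothetical data: R1 is mission critical, R2 and R3 are not.
criticality = {"R1": "high", "R2": "low", "R3": "low"}
test_cases = {"T1": ["R1", "R2"], "T2": ["R1"]}
findings = review_test_plan(criticality, test_cases)
print(findings)
```

With this sample data, `R1` is flagged as under-tested (two test cases against an assumed minimum of three) and `R3` as untested, while `R2` passes without a finding.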

2.4.2.2 Test Coverage

The main objective is to verify that each business requirement will be tested; the implication is that if the software passes the test, the business requirement’s functionality is successfully included in the system. This is done by determining that each requirement is linked to at least one test case.

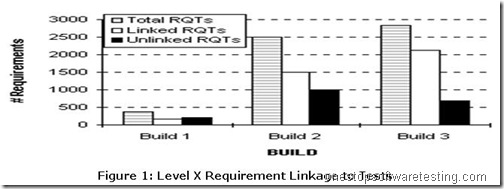

For example, a query such as the one below would yield data that could be displayed, for different builds, in a graph like the one shown in Figure 1:

Query 1: How many requirements in Level X Build 1 are linked to a test case?
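As an illustration, Query 1 could be answered over an in-memory list of requirement records. This is only a sketch: the `Requirement` record, its fields, and the `linked_count` function are hypothetical names, and an actual requirements repository would expose its own query interface.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    # Hypothetical record layout, assumed for this example.
    req_id: str
    level: str
    build: int
    test_cases: set = field(default_factory=set)


def linked_count(requirements, level, build):
    """Return (linked, total) for the requirements in one level and build."""
    in_scope = [r for r in requirements if r.level == level and r.build == build]
    linked = sum(1 for r in in_scope if r.test_cases)
    return linked, len(in_scope)


# Hypothetical sample data.
requirements = [
    Requirement("R1", "X", 1, {"T1"}),
    Requirement("R2", "X", 1),           # not linked to any test case
    Requirement("R3", "X", 2, {"T2"}),
    Requirement("R4", "Y", 1, {"T1"}),
]

linked, total = linked_count(requirements, level="X", build=1)
print(f"Level X Build 1: {linked} of {total} requirements linked to a test case")
```

Running the same function for each build gives one data point per build, which is the kind of series a coverage graph would plot.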

2.4.2.3 Test Span

This activity characterizes the test plan and identifies potentially insufficient or excess testing. Requirements are usually tested by more than one test case, and one test case usually covers more than one requirement. Since each test costs money and takes time, the obvious questions are how many requirements are covered by one test, and how many tests cover only one requirement. On the other hand, if requirements are insufficiently tested, functionality may not be verified.

The metrics for this analysis are in two parts because of the bi-directional linkage between the requirements and tests. Each direction yields different information. Counting the number of unique tests used for a requirement indicates that requirements at both ends of the graph may have too much or too little testing. Counting the number of unique requirements tested indicates the exclusivity of the testing. Figure 2 shows an expected profile of unique requirements per test case.

This graph reflects the expectation that a large number of requirements will be tested by only one test case, and that some number of requirements will be tested by multiple test cases. The upper bound of multiple test cases is expected to range in the tens. This makes sense, as more complicated requirements may require different test cases to thoroughly verify all aspects of the requirement. However, there is a limit on the number of test cases: as the number of test cases increases, the difficulty of verifying the requirement also increases. This difficulty arises from the complication in data analysis, in understanding the results of the multiple test cases, and in understanding the impact of multiple test case results on the verification of the requirement. The number of tests per requirement counts the unique tests associated with each requirement. A program query such as the one below might be used for the different tests conducted:

Query 1: How many requirements are tested by Test A.1? (Acceptance test, Test1)
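Because the linkage is bi-directional, both counts can be derived from the same set of requirement-to-test links. The sketch below assumes the links are available as `(requirement_id, test_id)` pairs; the sample data and the names `span_metrics` and `profile` are hypothetical.

```python
from collections import Counter

# Hypothetical links between requirements and test cases.
links = [
    ("R1", "A.1"),
    ("R2", "A.1"),
    ("R2", "A.2"),
    ("R3", "A.1"),
    ("R3", "A.2"),
    ("R3", "A.3"),
]


def span_metrics(links):
    """Count unique tests per requirement and unique requirements per test."""
    unique_links = set(links)  # ignore duplicate link entries
    tests_per_requirement = Counter(req for req, _ in unique_links)
    requirements_per_test = Counter(test for _, test in unique_links)
    return tests_per_requirement, requirements_per_test


def profile(counts):
    """Histogram of the counts: how many items have 1, 2, 3, ... links."""
    return dict(sorted(Counter(counts.values()).items()))


tests_per_requirement, requirements_per_test = span_metrics(links)

# Query 1: how many requirements are tested by Test A.1?
print(requirements_per_test["A.1"])

# Profile of tests per requirement: {number of tests: number of requirements}
print(profile(tests_per_requirement))
```

The two `profile` results are the raw data for the two histograms: one shows how many requirements have one, two, or more tests, and the other shows how many tests cover one, two, or more requirements.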

2.4.2.4 Test Complexity

The objective of an effective verification program is to ensure that every requirement is tested, the implication being that if the system passes the test, the requirement’s functionality is included in the delivered system. An assessment of the traceability of the requirements to test cases is needed. It is expected that a requirement will be linked to a test case, and may well be linked to more than one test case, as shown in Figure 3.

The important aspect of this analysis is to determine which requirements have not been linked to any test cases at all.
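A traceability check of this kind can be sketched as follows. The trace is assumed to be a mapping from requirement id to the test case ids linked to it; the function name `trace_report` and the sample ids are hypothetical.

```python
def trace_report(all_requirements, trace):
    """Split requirements into unlinked and multiply linked groups.

    all_requirements: iterable of requirement ids
    trace: {requirement_id: [test_case_id, ...]} (assumed structure)
    """
    unlinked = sorted(r for r in all_requirements if not trace.get(r))
    multi_linked = sorted(
        r for r in all_requirements if len(set(trace.get(r, ()))) > 1
    )
    return unlinked, multi_linked


# Hypothetical data: R2 has an empty link list, R3 is missing from the trace.
all_requirements = ["R1", "R2", "R3", "R4"]
trace = {"R1": ["T1", "T2"], "R2": [], "R4": ["T3"]}

unlinked, multi_linked = trace_report(all_requirements, trace)
print("Not linked to any test case:", unlinked)
print("Linked to more than one test case:", multi_linked)
```

Requirements with an empty link list and requirements absent from the trace are treated the same way here, since in both cases nothing verifies them.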

Go to Part 1 – Metric Based Approach for Requirements Gathering and Testing
